NVIDIA-Powered Robust AI Clusters

Accelerate your AI workloads with reliable NVIDIA GPU clusters on Kobayashi AI Cloud. Get bare-metal performance from the latest Blackwell & Hopper systems, connected via non-blocking NVIDIA InfiniBand, all within a secure, fully virtualized cloud environment.

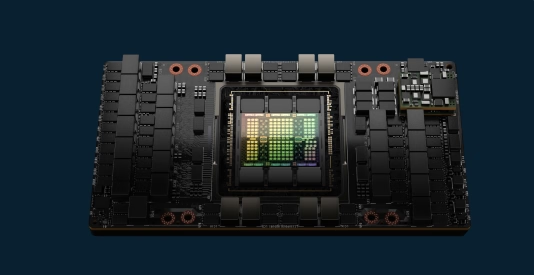

NVIDIA HGX B200

Air-cooled systems optimized for building & running reasoning LLMs, multi-modal models, and agentic AI.

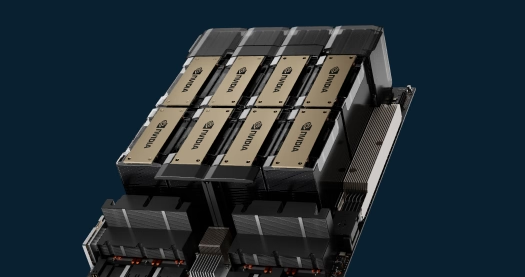

NVIDIA HGX H200

Extended GPU memory for consistent, predictable performance in LLM & multi-modal training/inference.

NVIDIA HGX H100

Cost-effective, robust GPU compute for large-scale foundation model building & serving.